How to Optimize Your E-commerce Operations with Product Catalog Data Scraping

Introduction

In the fast-paced world of e-commerce, staying competitive requires efficient data management and real-time insights. Product catalog data scraping offers a powerful way to streamline operations, gain market insights, and improve decision-making. This guide explains the benefits of e-commerce data scraping and walks through the techniques for collecting, parsing, and storing product catalog data.

Why Scrape Product Catalog Data?

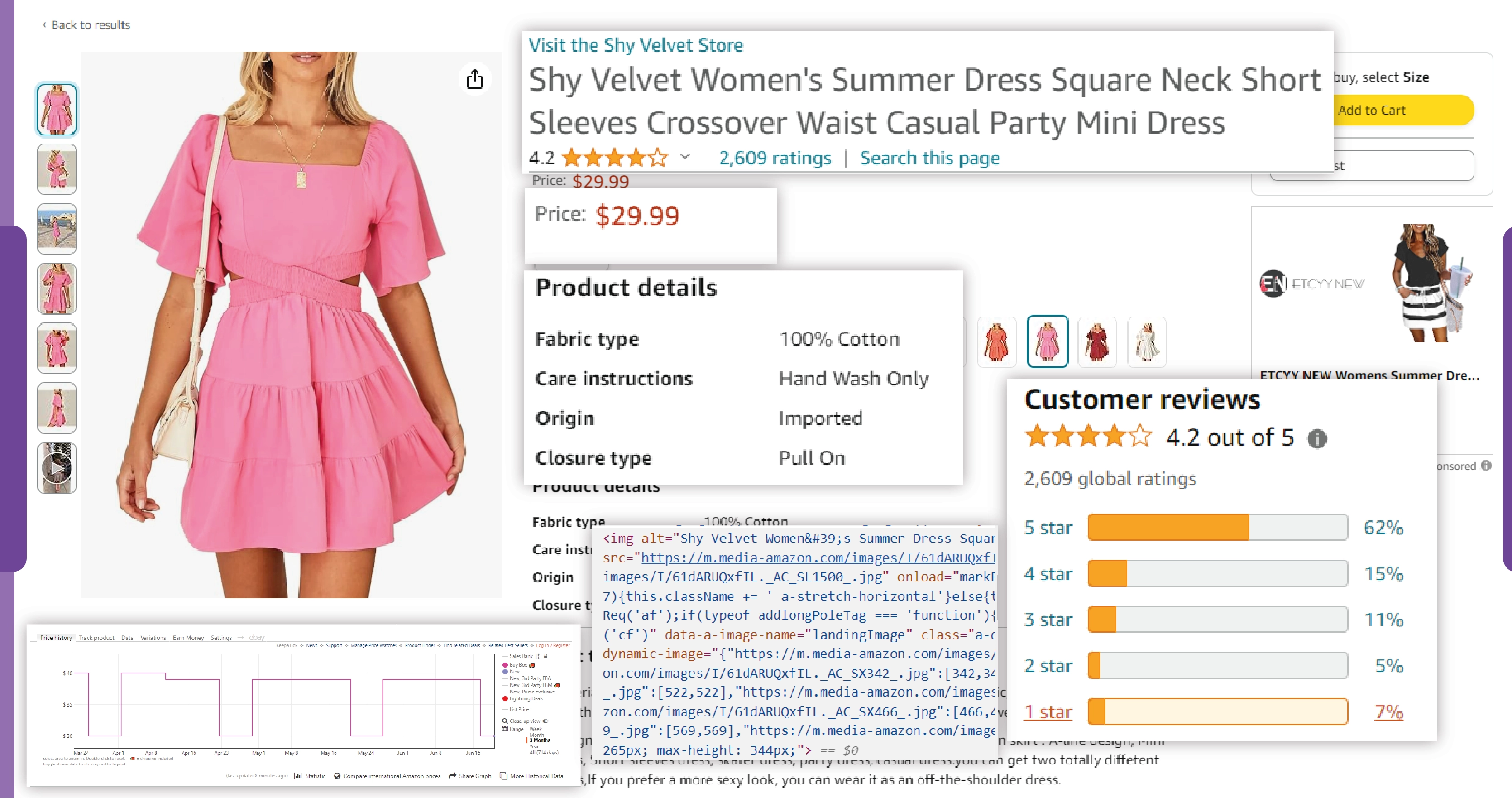

1. Enhanced Market Research

Product catalog data scraping allows businesses to gather comprehensive information on competitors’ product offerings, prices, and promotional strategies. This data is invaluable for conducting market research, identifying trends, and understanding consumer preferences. By scraping e-commerce data, businesses can make informed decisions about their product lines, pricing strategies, and marketing campaigns.

2. Price Comparison

Scraping product catalog data from multiple e-commerce platforms enables businesses to perform effective price comparisons. By analyzing competitors’ prices, companies can adjust their pricing strategies to remain competitive while maximizing profits. This real-time price monitoring ensures that businesses can respond swiftly to market changes and maintain a competitive edge.

3. Inventory Management

Accurate and up-to-date product catalog data is essential for efficient inventory management. By collecting data on stock levels, product turnover rates, and demand patterns, businesses can optimize their inventory, reduce stockouts, and minimize overstock situations. This leads to better cash flow management and improved customer satisfaction.

4. Improved Customer Experience

Understanding customer preferences and buying behavior is crucial for providing a superior shopping experience. Product catalog data scraping helps businesses analyze the customer reviews and ratings published on product pages. Combined with their own purchase history data, this information can be used to personalize product recommendations, tailor marketing efforts, and enhance overall customer satisfaction.

5. Competitive Benchmarking

Regular e-commerce data collection allows businesses to benchmark their performance against competitors. By comparing product offerings, prices, and customer feedback, companies can identify areas for improvement and opportunities for differentiation. This competitive intelligence is vital for staying ahead in a crowded market.

Setting Up Your Data Scraping Environment

Tools and Technologies

To get started with product catalog data scraping, you’ll need the following tools and technologies:

Python: A versatile programming language widely used for web scraping.

Libraries: BeautifulSoup, Requests, Selenium, and Scrapy.

IDE: An Integrated Development Environment such as PyCharm or VSCode.

Proxy Servers: To avoid IP blocking and ensure continuous data scraping.

Storage: Databases (e.g., MySQL, MongoDB) or file formats (e.g., CSV, JSON) to store the scraped data.

Installing Required Libraries

First, ensure Python is installed on your system. You can download it from python.org.

Next, install the necessary libraries using pip:

pip install requests beautifulsoup4 selenium scrapy

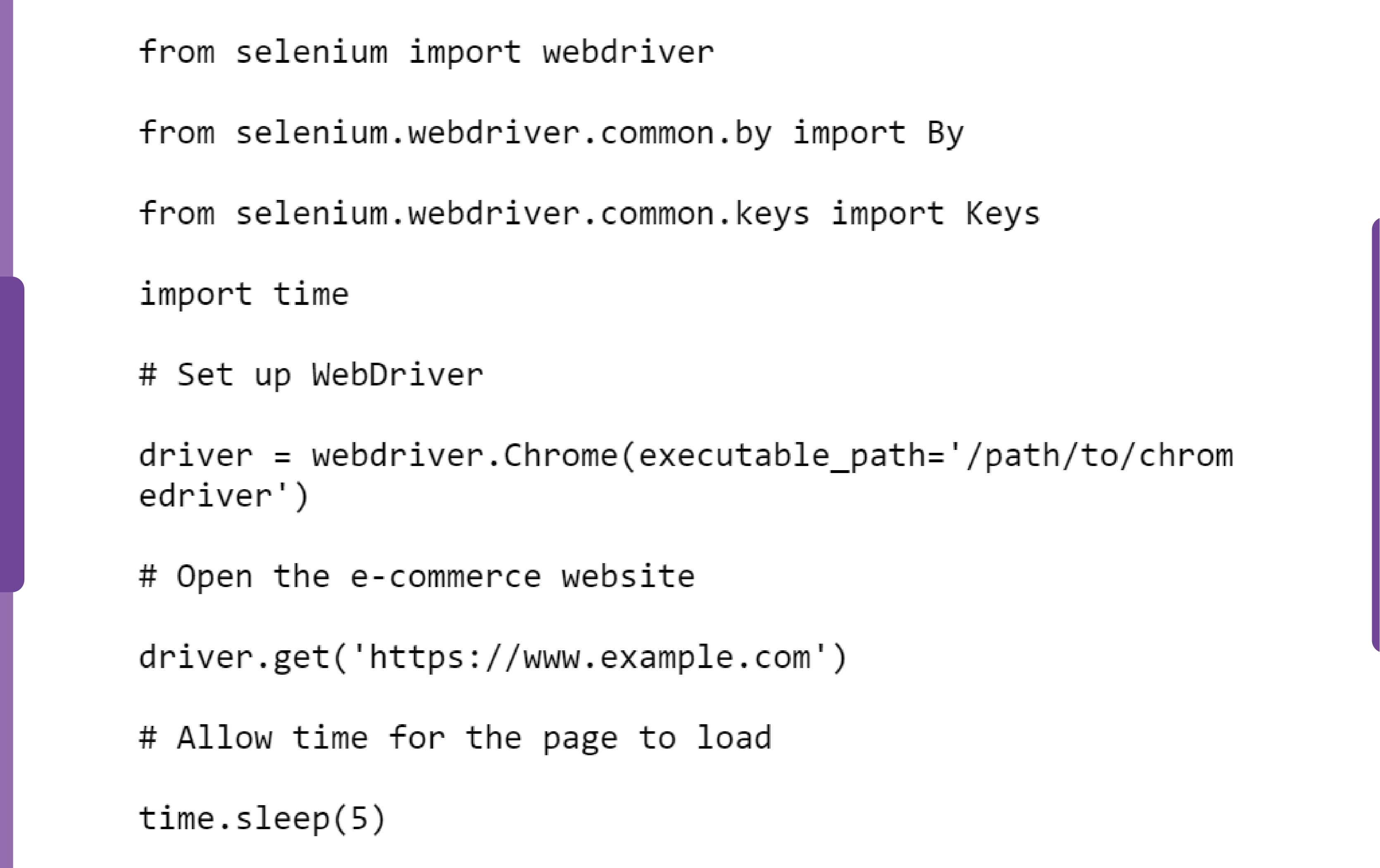

Setting Up WebDriver for Selenium

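Scraping content that JavaScript renders in the browser calls for Selenium WebDriver, which drives a real browser. Selenium 4.6 and later include Selenium Manager, which can download a driver matching your installed browser automatically. With older versions, download the WebDriver that matches your browser version; for Chrome, that is ChromeDriver.

If you manage the driver yourself, place the executable in a directory included in your system's PATH.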

Scraping Static Product Catalog Data

Step-by-Step Guide

1. Inspecting the Web Page

Open the target e-commerce website in your browser. Use the browser's developer tools (F12) to inspect the elements you want to scrape. Identify the HTML tags and classes associated with the product data.

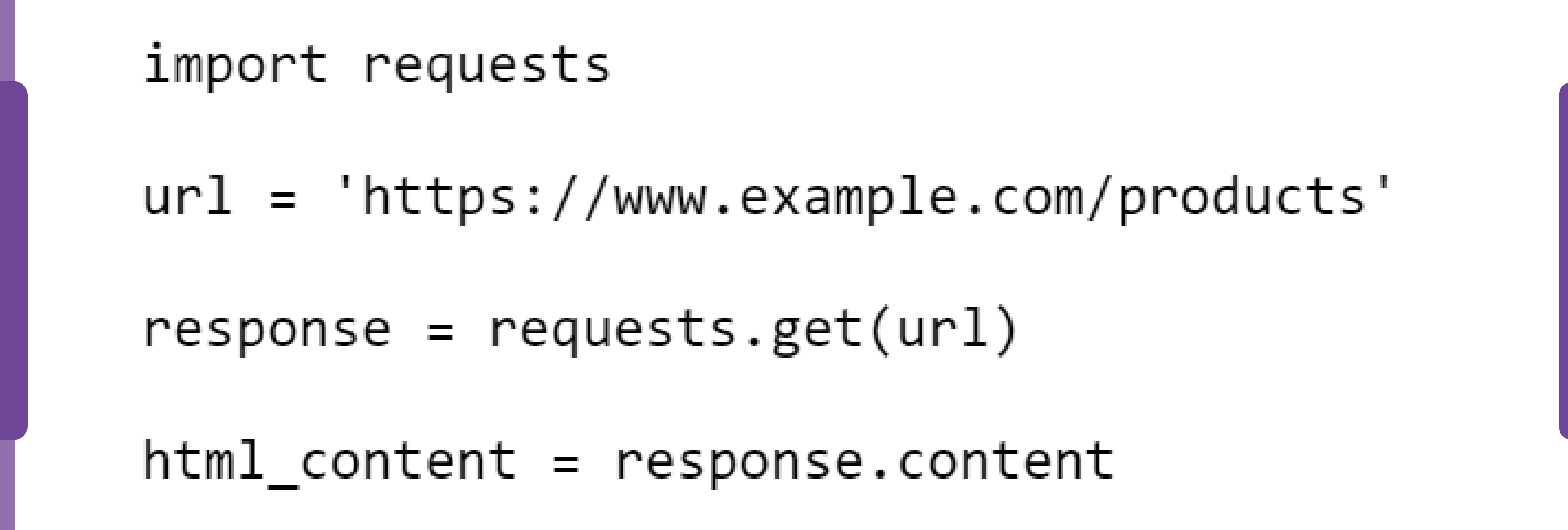

2. Sending HTTP Requests

Use the requests library to send HTTP requests and retrieve the HTML content.
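A minimal sketch of this step, assuming the page is ordinary server-rendered HTML. The `fetch_html` helper, the example URL, and the `User-Agent` string are hypothetical; only `requests.Session.get`, `raise_for_status()`, and `.text` come from the requests library itself.

```python
from typing import Optional

import requests

# Illustrative headers; the bot name is made up, so identify your own crawler honestly.
DEFAULT_HEADERS = {
    "User-Agent": "Mozilla/5.0 (compatible; CatalogResearchBot/0.1)",
    "Accept-Language": "en-US,en;q=0.9",
}


def fetch_html(url: str, session: Optional[requests.Session] = None, timeout: float = 10.0) -> str:
    """Download one catalog page and return its HTML as text."""
    http = session or requests.Session()  # a shared Session reuses connections and cookies
    response = http.get(url, headers=DEFAULT_HEADERS, timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    return response.text
```

For example, `html = fetch_html("https://www.example.com/category/shoes")` would return the page source for the parsing step. Passing the same `requests.Session` for every page avoids opening a new connection per request.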

3. Parsing HTML Content

Use BeautifulSoup to parse the HTML and extract the data.
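The sketch below parses a small, made-up HTML snippet. The `div.product` card selector and the class names inside it are assumptions standing in for whatever you identified while inspecting the real page.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded catalog page.
SAMPLE_HTML = """
<div class="product">
  <h2 class="product-title">Trail Running Shoe</h2>
  <span class="price">$89.99</span>
  <span class="stock">In stock</span>
</div>
<div class="product">
  <h2 class="product-title">Hiking Sock (3-pack)</h2>
  <span class="price">$14.50</span>
  <span class="stock">Out of stock</span>
</div>
"""


def find_product_cards(html: str, card_selector: str = "div.product"):
    """Parse the HTML and return every element matching the product-card CSS selector."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser; "lxml" is faster if installed
    return soup.select(card_selector)
```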

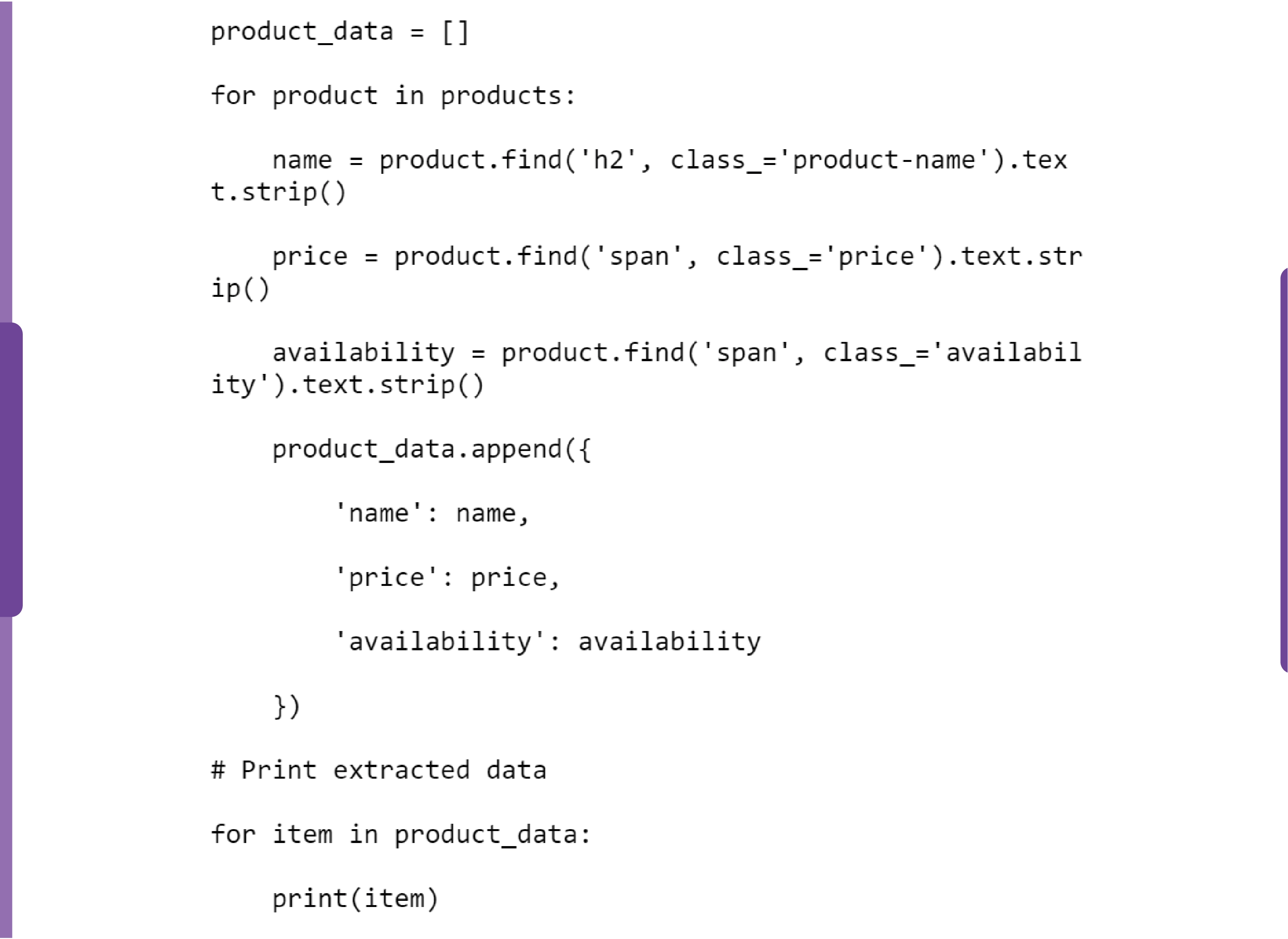

4. Extracting Data

Loop through the product elements and extract details such as name, price, and availability.
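A possible extraction loop, again against hypothetical markup and class names. Each field is looked up with `select_one`, so a missing element yields `None` instead of an exception, and prices are converted to `Decimal` to avoid floating-point rounding. The cleaning step only strips `$` and thousands separators, which is an assumption about the site's price format.

```python
from decimal import Decimal, InvalidOperation
from typing import Optional

from bs4 import BeautifulSoup

# Hypothetical markup: the second card has no stock element.
SAMPLE_HTML = """
<div class="product">
  <h2 class="product-title">Trail Running Shoe</h2>
  <span class="price">$89.99</span>
  <span class="stock">In stock</span>
</div>
<div class="product">
  <h2 class="product-title">Camping Stove</h2>
  <span class="price">$1,299.00</span>
</div>
"""


def text_of(card, selector: str) -> Optional[str]:
    """Stripped text of the first match inside `card`, or None if nothing matches."""
    node = card.select_one(selector)
    return node.get_text(strip=True) if node else None


def parse_price(raw: Optional[str]) -> Optional[Decimal]:
    """Turn text like '$1,299.00' into Decimal('1299.00'); None if it is not a number."""
    if not raw:
        return None
    cleaned = raw.replace("$", "").replace(",", "").strip()
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None  # e.g. "Call for price"


def extract_products(html: str) -> list:
    """Return one dict per product card with name, price, and availability."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select("div.product"):
        products.append({
            "name": text_of(card, ".product-title"),
            "price": parse_price(text_of(card, ".price")),
            "availability": text_of(card, ".stock"),
        })
    return products
```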

Scraping Dynamic Product Catalog Data

Content that JavaScript generates after the page loads is missing from the raw HTML that requests downloads, so it calls for a different approach: driving a real browser with Selenium.

Step-by-Step Guide

1. Setting Up Selenium

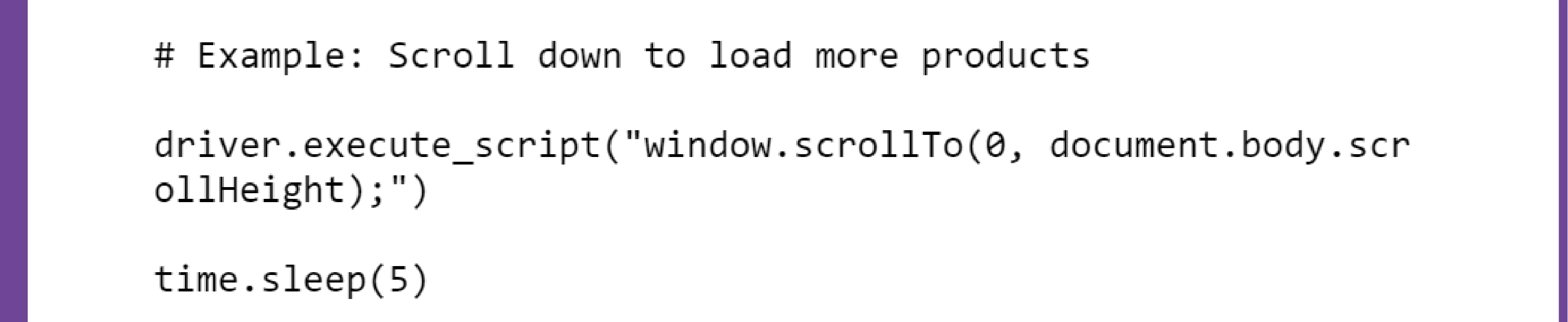

2. Interacting with the Page

Use Selenium to interact with the page, such as scrolling or clicking buttons to load more products.

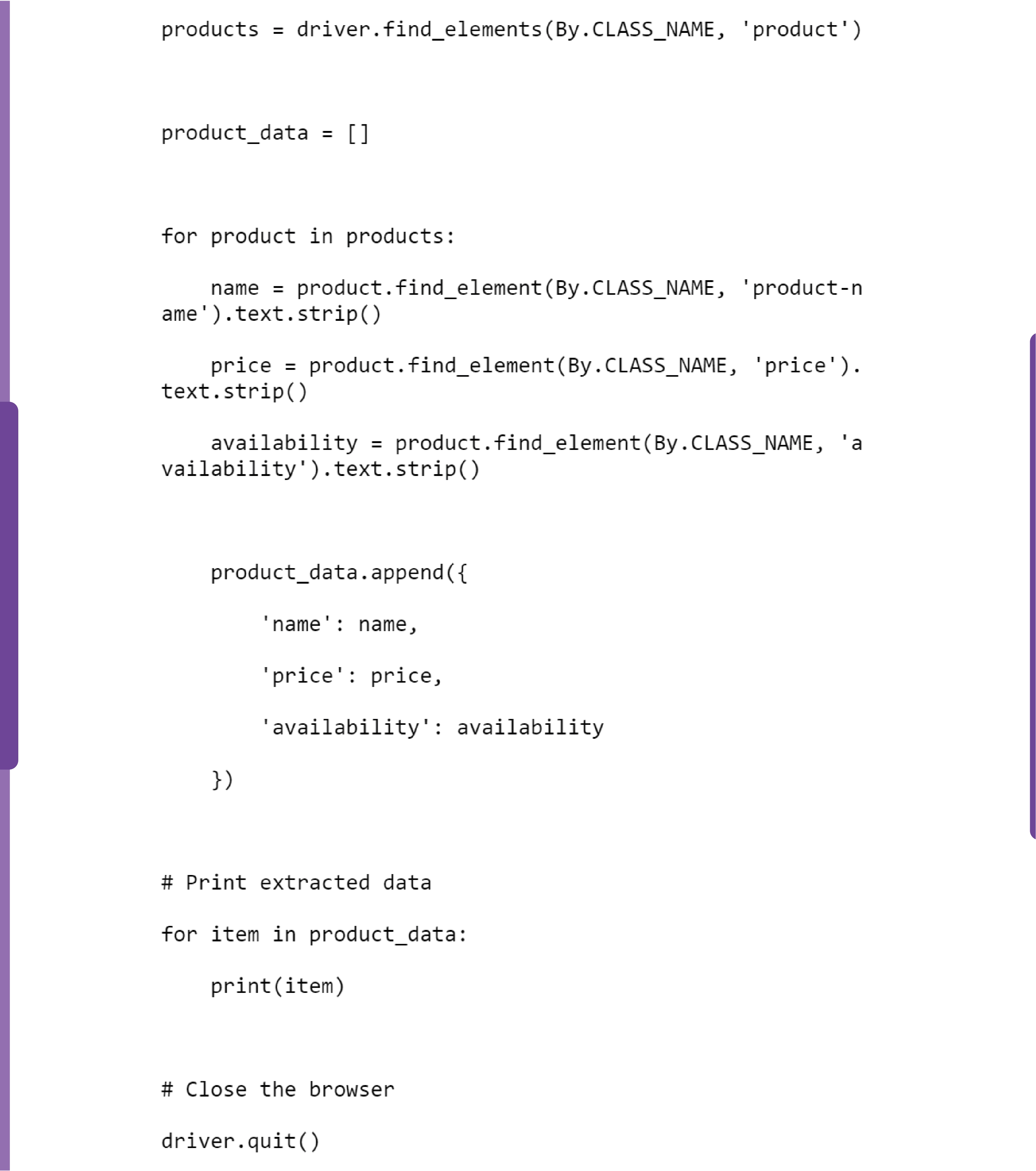

3. Extracting Data

Locate and extract the product details using Selenium.

Storing the Extracted Data

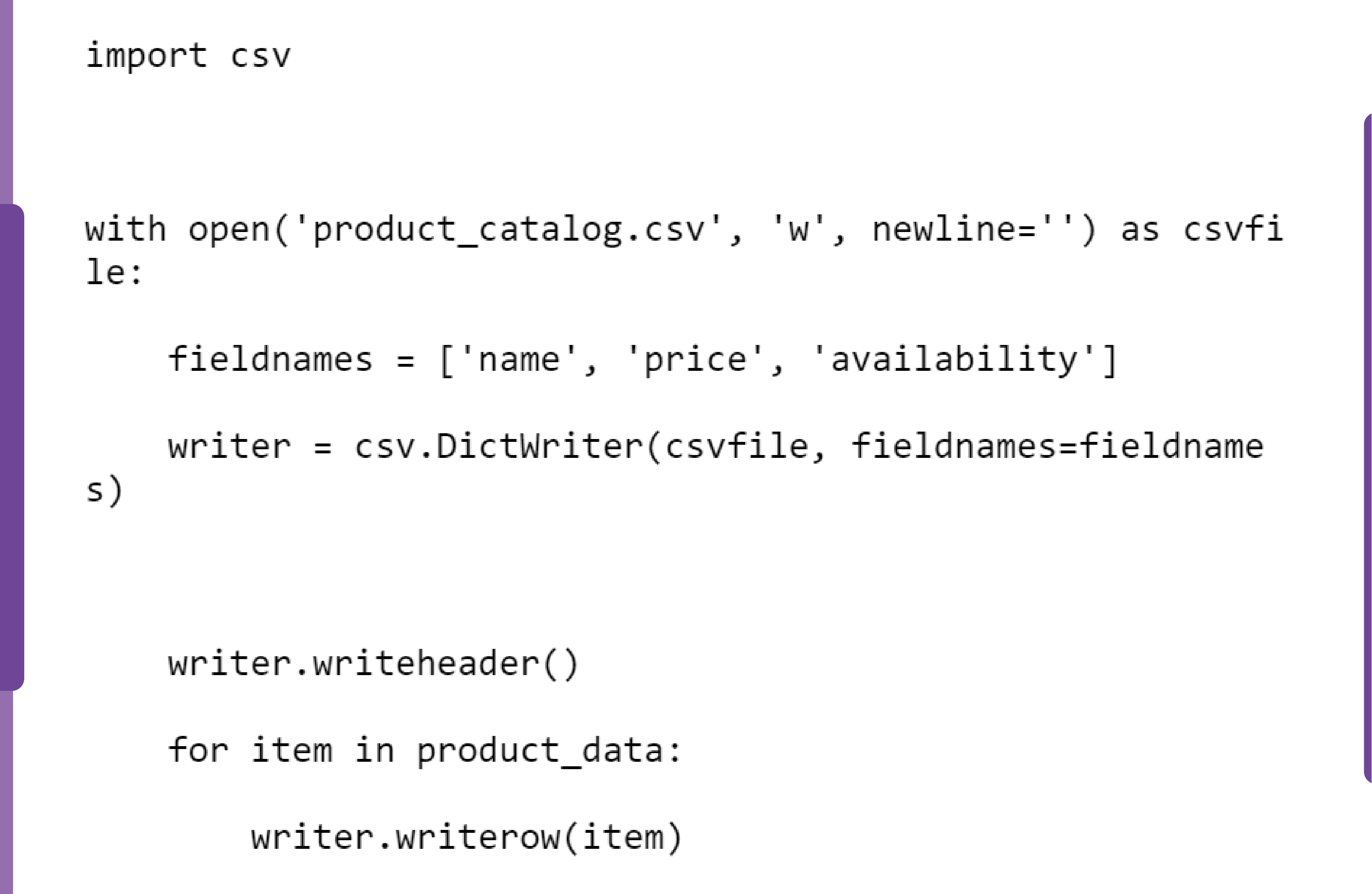

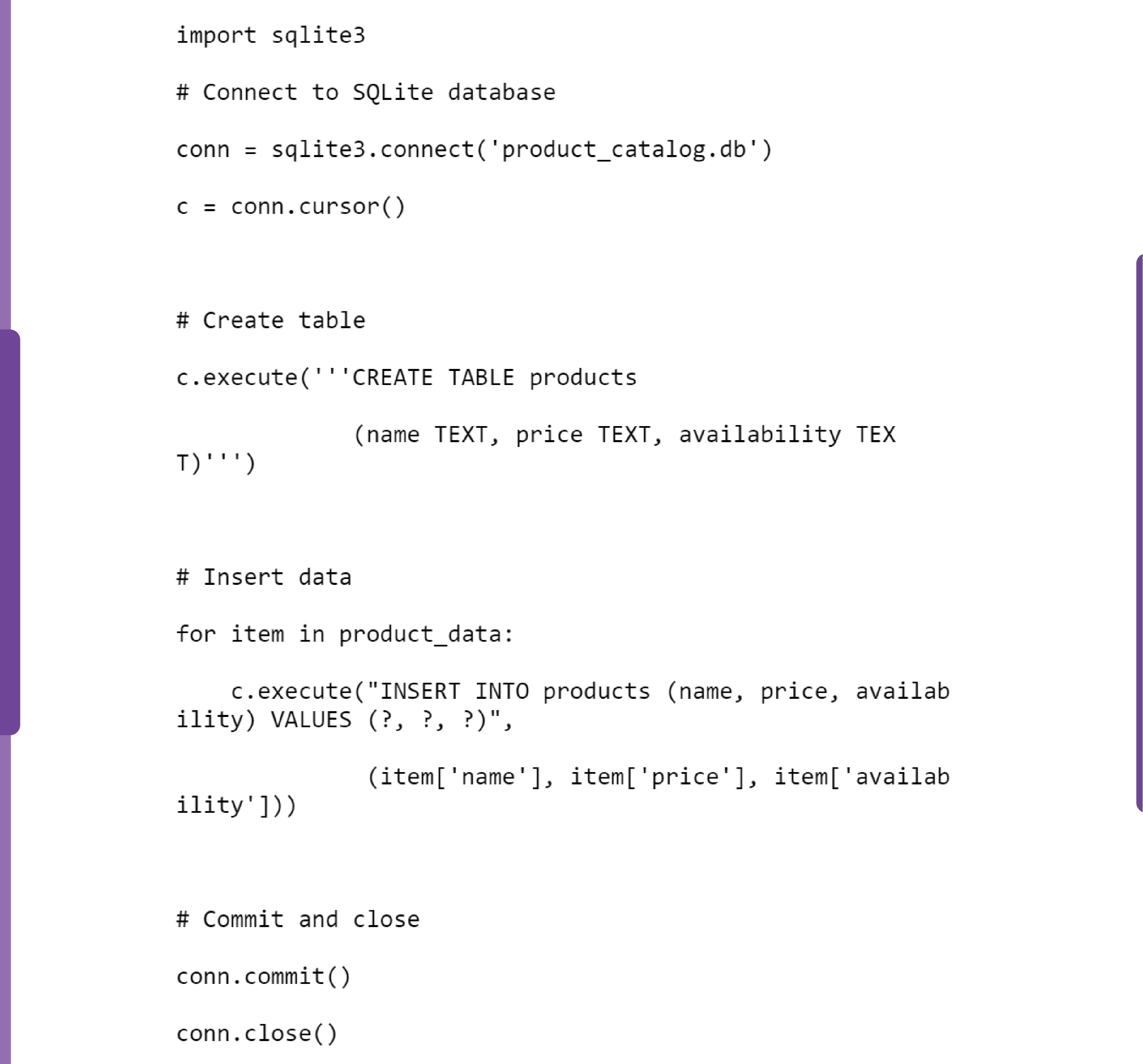

Once you have extracted the data, you need to store it in a structured format for further analysis. You can use a database like SQLite or a simple CSV file.

Storing in CSV
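A minimal sketch using Python's standard `csv` module. It assumes each product is a dictionary with `name`, `price`, and `availability` keys (hypothetical field names); extra keys are ignored and missing ones are written as empty cells.

```python
import csv
from typing import Iterable, Mapping

FIELDNAMES = ["name", "price", "availability"]


def save_to_csv(rows: Iterable[Mapping[str, object]], path: str) -> int:
    """Write product dicts to a CSV file with a header row; returns the number of rows written."""
    count = 0
    # newline="" lets the csv module control line endings, as the csv docs recommend
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES, extrasaction="ignore")
        writer.writeheader()
        for row in rows:
            writer.writerow(row)
            count += 1
    return count
```

For example, `save_to_csv(products, "products.csv")` produces a file that opens directly in a spreadsheet or loads into pandas for analysis.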

Storing in SQLite
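A comparable sketch with the standard `sqlite3` module; the table layout is an assumption. Rows are dictionaries with `name`, `price`, and `availability` keys, and prices should be floats or strings, because `sqlite3` cannot bind `Decimal` values without a registered adapter.

```python
import sqlite3
from typing import Iterable, Mapping

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    price REAL,
    availability TEXT,
    scraped_at TEXT DEFAULT CURRENT_TIMESTAMP
)
"""


def save_to_sqlite(conn: sqlite3.Connection, rows: Iterable[Mapping[str, object]]) -> int:
    """Create the products table if needed and insert rows; returns the number inserted."""
    rows = list(rows)
    with conn:  # commits if the block succeeds, rolls back if it raises
        conn.execute(SCHEMA)
        conn.executemany(
            "INSERT INTO products (name, price, availability) "
            "VALUES (:name, :price, :availability)",
            rows,
        )
    return len(rows)
```

For example, open `sqlite3.connect("products.db")`, call `save_to_sqlite(conn, products)`, then close the connection. The `scraped_at` default records when each row was inserted (in UTC), which makes it possible to track price changes across repeated scrapes.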

Conclusion

Scraping product catalog data offers immense potential for optimizing e-commerce operations. From market research and price comparison to inventory management and customer experience enhancement, the insights gained from scraped data can drive strategic decision-making and improve business outcomes. By leveraging the right tools and techniques, businesses can harness the power of e-commerce data scraping to stay competitive and responsive in a dynamic market.

Real Data API is here to help you navigate the complexities of product catalog data scraping. With our expertise in web data services and instant data scraper solutions, we can empower your e-commerce business with actionable insights and streamlined data management. Contact us today to start transforming your e-commerce operations with advanced data scraping techniques!

Know More: https://www.realdataapi.com/optimize-ecommerce-operations-with-product-catalog-data-scraping.php