Extracting GrabFood Delivery Website Data for Manila: A Detailed Guide

Introduction

In the rapidly evolving food delivery market, understanding local dynamics is crucial for businesses that want to optimize their strategies and improve the customer experience. GrabFood, a leading food delivery platform, operates extensively in Manila, which makes it a prime target for data extraction. This blog walks you through extracting data from GrabFood delivery websites for the Manila location, covering the tools, techniques, and best practices that help you put this information to use.

Why Extract GrabFood Delivery Websites Data?

Scraping GrabFood delivery websites provides insights that matter to any business competing in the food delivery industry. Here’s why GrabFood delivery website scraping is so valuable:

Market Insights: By extracting data from GrabFood delivery sites, businesses gain access to restaurant offerings, customer reviews, and pricing strategies. This helps in understanding market trends, identifying popular restaurants, and assessing consumer preferences, which in turn supports informed decisions and strategies that match market demand.

Competitive Analysis: Extracting data from GrabFood delivery sites enables businesses to benchmark their performance against competitors. By analyzing delivery times, pricing, and menu items, you can identify gaps in your service and opportunities for improvement. This competitive intelligence is vital for staying ahead in a crowded market.

Customer Feedback: Customer reviews and ratings collected from GrabFood delivery websites show how satisfied customers are and where service falls short. Addressing common complaints and building on positive feedback can significantly improve your service and boost customer loyalty.

Demand Forecasting: Data on delivery volumes and peak times helps in forecasting demand and optimizing operational efficiency. By analyzing trends in ordering patterns, businesses can better manage inventory, staffing levels, and marketing efforts, ensuring they are well-prepared for fluctuations in demand.

Strategic Planning: Data extracted from GrabFood delivery websites also supports strategic planning. From adjusting marketing strategies to developing new product offerings, the data collected can drive business growth and strengthen competitive positioning.

In short, scraping GrabFood delivery websites offers insight into market dynamics, the competitive landscape, and customer preferences. Using this data, businesses can make data-driven decisions, improve service quality, and maintain a competitive edge in the evolving food delivery sector.

Tools and Techniques for Extracting GrabFood Data

1. Choosing the Right Tools

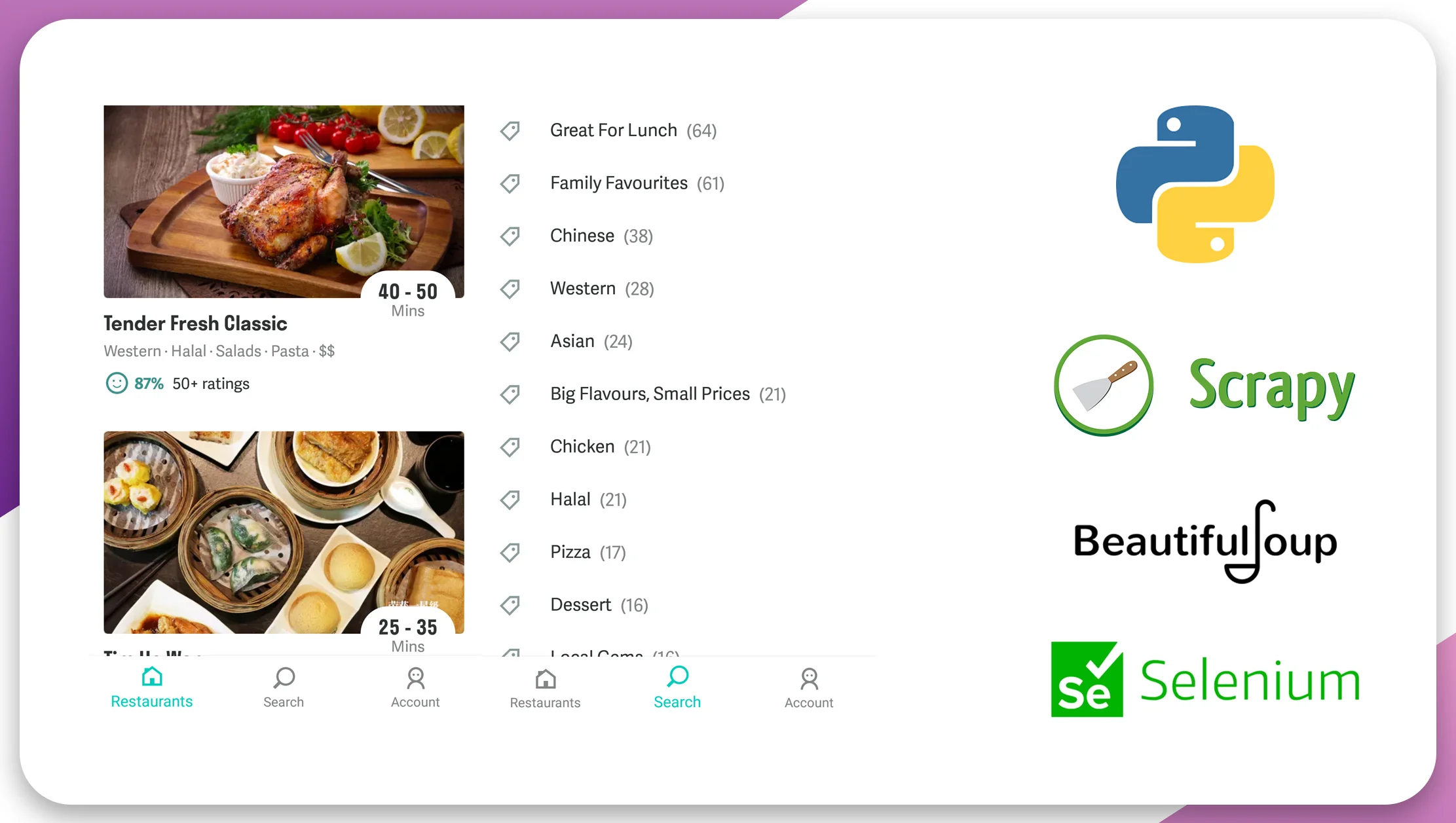

Selecting the right tools is crucial for extracting data from GrabFood delivery websites effectively. Here are some commonly used options:

Python Libraries: Python is a popular choice for web scraping due to its extensive libraries. BeautifulSoup and Scrapy are commonly used for extracting data from HTML, while Selenium helps in handling dynamic content.

Web Scraping Tools: Tools like Octoparse, ParseHub, and Import.io provide user-friendly interfaces for setting up web scraping projects without extensive coding.

APIs: Although GrabFood does not officially offer a public API for general data extraction, APIs from third-party services or similar platforms can sometimes be used to gather relevant data.

2. Using Python Libraries

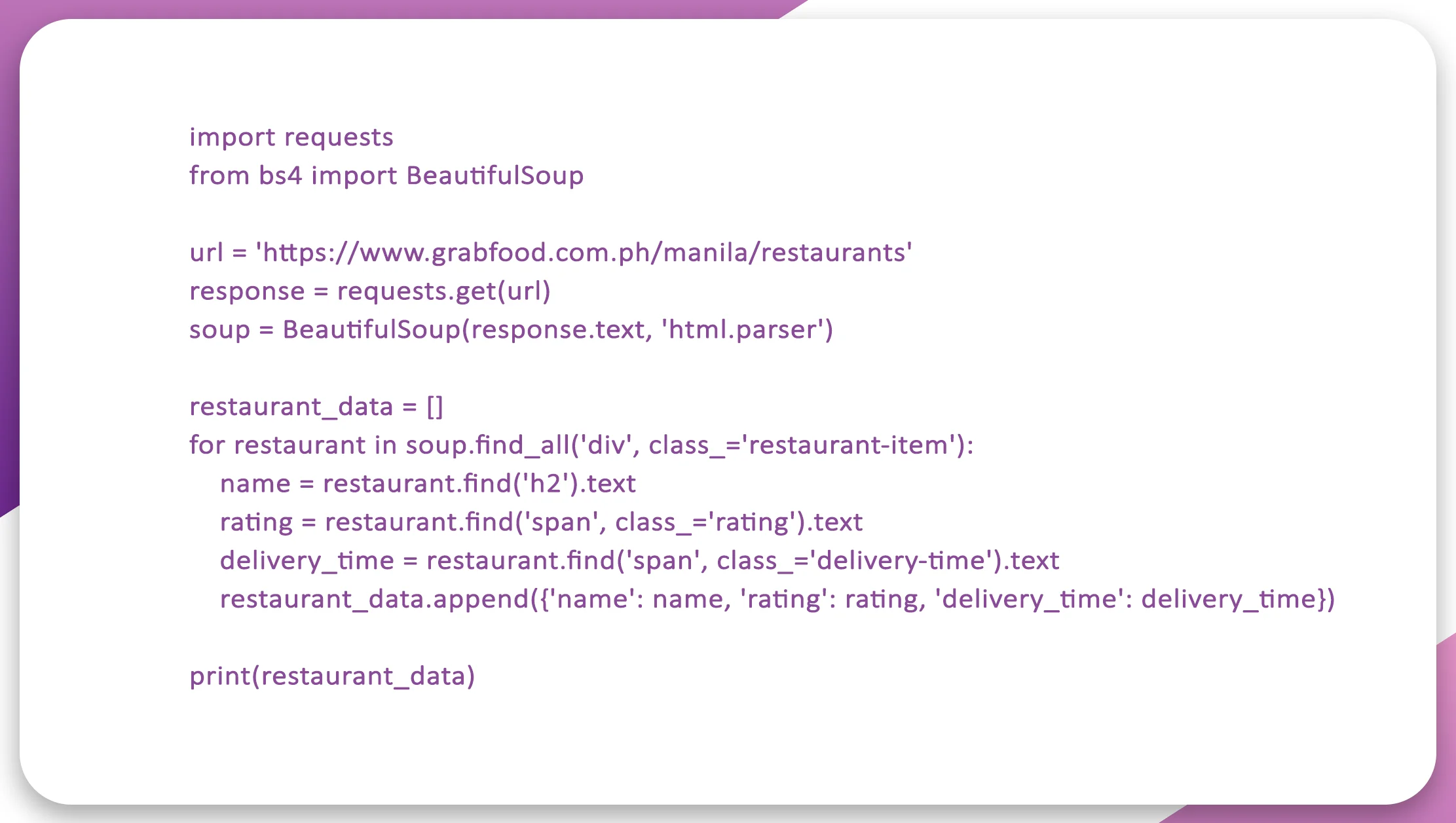

BeautifulSoup and Requests

For static web pages, BeautifulSoup combined with Requests is a straightforward solution:

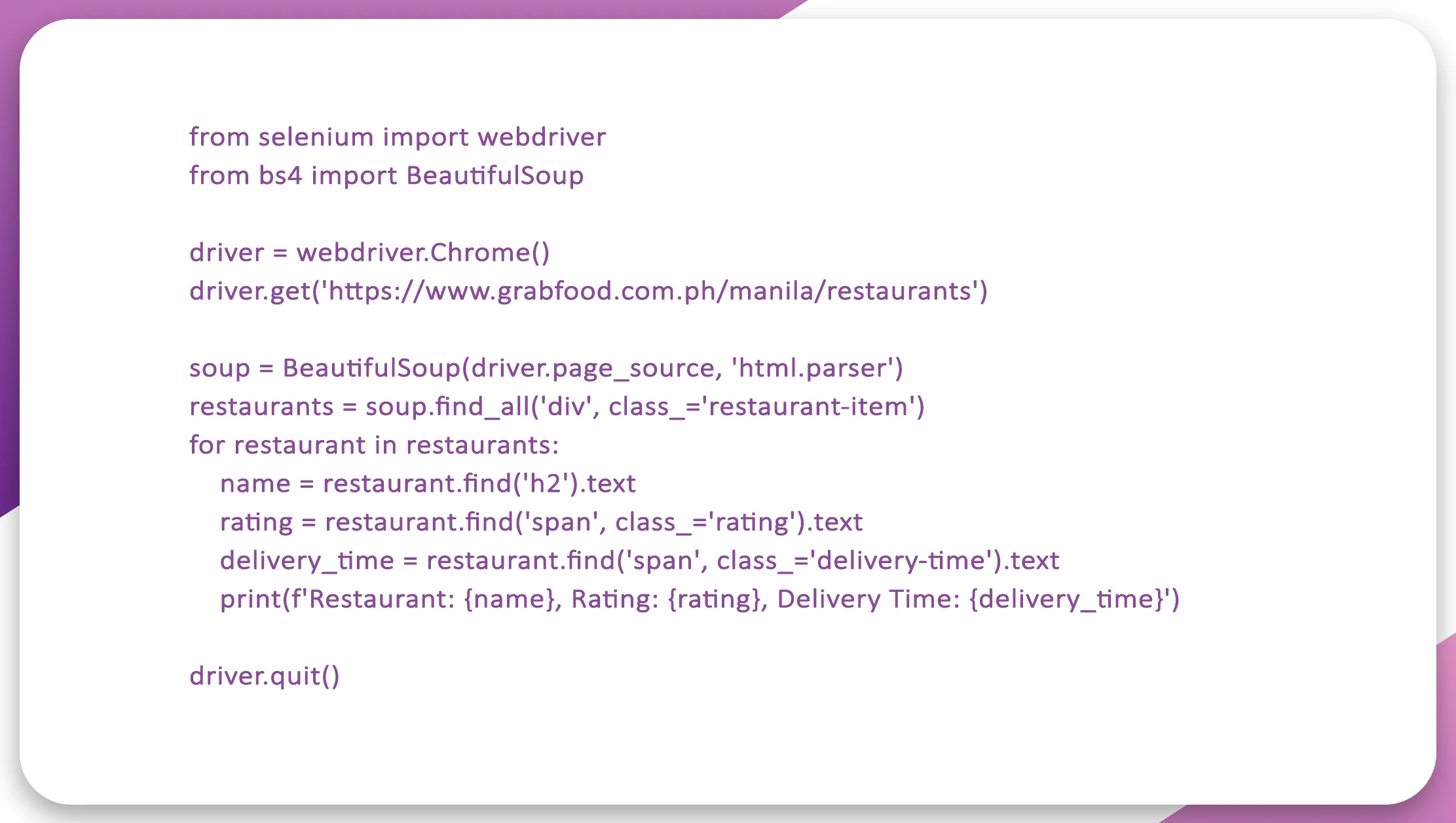
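In text form, a minimal sketch of this approach could look like the following. The CSS selectors (`div.restaurant-card`, `.name`, `.rating`) and the User-Agent string are placeholders chosen for illustration, not GrabFood's actual markup; inspect the live page to find the real selectors, which may also change over time.

```python
import requests
from bs4 import BeautifulSoup

# Hypothetical selectors: the real page structure will differ.
CARD_SELECTOR = "div.restaurant-card"
NAME_SELECTOR = ".name"
RATING_SELECTOR = ".rating"


def fetch_html(url, timeout=10):
    """Download a page and return its HTML, raising on HTTP errors."""
    headers = {"User-Agent": "Mozilla/5.0 (compatible; research-scraper/0.1)"}
    response = requests.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()
    return response.text


def parse_restaurants(html):
    """Extract the name and rating from each restaurant card in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for card in soup.select(CARD_SELECTOR):
        name = card.select_one(NAME_SELECTOR)
        rating = card.select_one(RATING_SELECTOR)
        results.append({
            "name": name.get_text(strip=True) if name else None,
            "rating": rating.get_text(strip=True) if rating else None,
        })
    return results
```

Calling `parse_restaurants(fetch_html(url))` on a listing page you are permitted to scrape returns a list of dictionaries. Keeping the download and the parsing in separate functions makes the parser easy to check against saved HTML without hitting the site again.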

Selenium for Dynamic Content

For dynamic pages that load content via JavaScript, Selenium is a powerful tool:

3. Handling Dynamic and Interactive Elements

Many delivery websites use JavaScript to load content dynamically. For these cases:

Use Headless Browsers: Tools like Selenium with headless browser modes (e.g., Chrome Headless) allow you to run the browser in the background, improving efficiency.

Simulate User Interactions: Automate actions such as scrolling or clicking to load additional data that is not immediately visible.

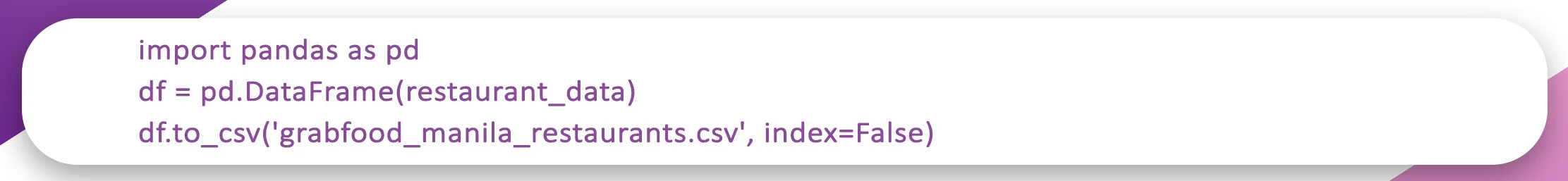
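The two ideas above can be sketched as follows, assuming Selenium 4 with Chrome. The helper names, window size, pause length, and round limit are illustrative choices, not requirements; pages that load more content through a button instead of scrolling would need a click step instead.

```python
import time


def make_headless_chrome():
    """Start Chrome without a visible window."""
    # Imported here so the scrolling helper below works with any driver-like object.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless=new")  # older Chrome versions use "--headless"
    options.add_argument("--window-size=1280,2000")
    return webdriver.Chrome(options=options)


def scroll_to_bottom(driver, pause=1.5, max_rounds=20, sleep=time.sleep):
    """Scroll until the page height stops growing; return the number of scrolls."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    for round_number in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        sleep(pause)  # give lazily loaded content time to arrive
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return round_number
        last_height = new_height
    return max_rounds
```

The `max_rounds` cap keeps the loop from running forever on pages with endless feeds, and the pause doubles as a simple courtesy delay between requests triggered by scrolling.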

4. Data Structuring and Storage

After scraping, structuring and storing data efficiently is essential:

Database Storage: Use databases like MySQL, PostgreSQL, or MongoDB to store large volumes of data. This facilitates easy querying and analysis.

CSV Files: For smaller datasets or initial analysis, saving data in CSV format can be a simple solution.
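Both options can be sketched with Python's standard library. SQLite stands in here for MySQL or PostgreSQL so the example runs without a database server; the table name and the field names (`name`, `cuisine`, `rating`, `delivery_fee_php`) are assumptions made for illustration.

```python
import csv
import sqlite3

FIELDS = ["name", "cuisine", "rating", "delivery_fee_php"]


def save_csv(rows, path):
    """Write scraped rows (a list of dicts) to a CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


def save_sqlite(rows, connection):
    """Insert or update scraped rows in a 'restaurants' table, keyed by name."""
    connection.execute(
        """CREATE TABLE IF NOT EXISTS restaurants (
               name TEXT PRIMARY KEY,
               cuisine TEXT,
               rating REAL,
               delivery_fee_php REAL
           )"""
    )
    connection.executemany(
        """INSERT OR REPLACE INTO restaurants
           VALUES (:name, :cuisine, :rating, :delivery_fee_php)""",
        rows,
    )
    connection.commit()
```

Using the restaurant name as the primary key means that re-running the scraper updates existing rows instead of duplicating them. A real project would more likely key on a stable restaurant identifier if the page exposes one.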

Legal and Ethical Considerations

1. Adhering to Terms of Service

Before scraping data, review the terms of service of GrabFood and any other target websites. Ensure that your scraping activities comply with their policies to avoid legal issues.

2. Respecting Data Privacy

Avoid scraping personally identifiable information (PII) or any sensitive data. Focus on aggregated data and avoid infringing on user privacy.
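One simple way to enforce this is an allowlist applied before anything is stored. The sketch below assumes each scraped review is a dictionary; the field names are hypothetical.

```python
# Only these (hypothetical) fields are kept; everything else is discarded.
ALLOWED_FIELDS = {"restaurant", "rating", "review_date"}


def strip_pii(record):
    """Return a copy of record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
```

An allowlist is safer than a blocklist here: a new field that appears on the page is dropped by default rather than stored by accident.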

3. Ethical Scraping Practices

Rate Limiting: Implement rate limiting to avoid overloading the target website’s servers.

Respect robots.txt: Adhere to the guidelines specified in the robots.txt file of the website.
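Both practices can be sketched with the standard library alone. The class and function names below are illustrative, and the right delay between requests is a judgment call that depends on the site.

```python
import time
from urllib.robotparser import RobotFileParser


def load_robots(robots_url):
    """Fetch and parse a site's robots.txt (performs a network request)."""
    parser = RobotFileParser()
    parser.set_url(robots_url)
    parser.read()
    return parser


def allowed_urls(urls, robots, user_agent="*"):
    """Return only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if robots.can_fetch(user_agent, url)]


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until min_interval seconds have passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

A scraper would call `limiter.wait()` immediately before each request and skip any URL that `allowed_urls` filters out.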

Practical Applications of Extracted Data

1. Optimizing Marketing Strategies

Analyze customer preferences and popular restaurants to tailor marketing campaigns. Focus on high-demand items and target areas with the most potential.

2. Improving Service Offerings

Use customer feedback and ratings to enhance service quality. Address common complaints and capitalize on positive feedback to improve overall customer satisfaction.

3. Operational Efficiency

Leverage data on delivery times and order volumes to optimize delivery logistics. Manage staffing levels and inventory to align with demand patterns.
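As a rough illustration of finding demand peaks, the sketch below counts records per hour of day. It assumes each record carries an ISO 8601 timestamp string; the function names are hypothetical.

```python
from collections import Counter
from datetime import datetime


def orders_per_hour(timestamps):
    """Count records per hour of day from ISO 8601 timestamp strings."""
    return Counter(datetime.fromisoformat(ts).hour for ts in timestamps)


def peak_hours(timestamps, top_n=3):
    """Return the top_n busiest hours as (hour, count) pairs, busiest first."""
    return orders_per_hour(timestamps).most_common(top_n)
```

The resulting hour counts can feed directly into staffing and rider allocation decisions for the busiest windows.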

4. Competitive Analysis

Monitor competitor pricing, menu offerings, and promotions to adjust your strategies. Stay ahead of the competition by offering unique value propositions and superior service.
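A simple pricing benchmark might compare your price for each item with the median competitor price. The sketch below assumes prices have already been scraped and grouped by item name; the function name and data shapes are illustrative.

```python
from statistics import median


def price_gaps(own_prices, competitor_prices):
    """Compare your price for each item against the competitor median.

    own_prices: {item: price}
    competitor_prices: {item: [price, ...]}
    Returns {item: own_price - competitor_median} for items found in both.
    """
    gaps = {}
    for item, own in own_prices.items():
        rivals = competitor_prices.get(item)
        if rivals:  # skip items with no competitor data
            gaps[item] = round(own - median(rivals), 2)
    return gaps
```

A positive gap means you charge more than the typical competitor for that item, and a negative gap means you charge less. The median is used rather than the mean so that a single unusually priced listing does not skew the comparison.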

Conclusion

Extracting data from GrabFood delivery websites for the Manila location offers insights that can significantly shape your business strategy. By using the right tools and techniques for scraping GrabFood delivery websites, you can gain a deep understanding of market dynamics, customer preferences, and the competitive landscape.

Whether you’re using Python libraries like BeautifulSoup and Selenium, or leveraging advanced scraping tools, it’s essential to ensure that your data collection practices are ethical and comply with legal standards. Proper handling, storage, and analysis of the data will enable you to make informed decisions, optimize your operations, and enhance your competitive edge.

For expert assistance with extracting GrabFood delivery website data, partner with Real Data API. Our advanced solutions ensure accurate and reliable data extraction tailored to your needs. Contact us today to start gaining actionable insights and drive your business forward!